Content creators, please meet your new friend, BERT.

BERT is short for “Bidirectional Encoder Representations from Transformers”. I realize it is a complicated name, which is exactly why this article is a good place to start learning about it.

We are going to approach it lightly, and only from the written-content side, because BERT is a deep learning model built for text, not for images or audio.

What is BERT?

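BERT is a machine learning model that Google adopted from the artificial intelligence world to better understand language, both in the queries people type and in the content of your website. Fetching your pages, or in Google terms “crawling”, is still the job of Google’s crawler; BERT helps make sense of the words once they have been fetched.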

So, have you ever argued about whether it is a human being or a bot that reads the content of your website?

Well, the simple answer is that it is a bot, but one that learned to read from text written by humans.

And even though it is a deep learning algorithm that keeps getting better at reading the content of your website, where do you think it got its initial learning from?

The answer is simple: mostly Wikipedia.

How does BERT learn?

Before any algorithm can read text, it needs to learn. And that’s where datasets come in. Wikipedia is an almost perfect free online data source, with content written about nearly everything, and BERT was pre-trained on English Wikipedia together with a large collection of books. This data is the classroom in which the algorithm’s neural network practices reading huge amounts of content.

Those content warehouses are called “Datasets”, and of course they can come from any source, not just the most famous online encyclopedia. Then, after training with techniques from NLP (Natural Language Processing), the branch of AI that deals with human language, BERT basically becomes a wordsmith, a wizard of words, an oracle of content.

So, when you simply post any content on your blog, site or even social media pages, get ready to be assessed by BERT.

But what does BERT do exactly?

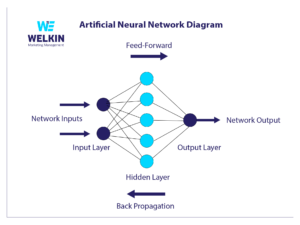

If a machine learning network is like a “human brain”, then each of its units is like a “brain cell”. That brain is fed input to learn from. It processes the input using the knowledge it already has, and you can call this part “thinking”. After thinking, it produces an output, compares that output with the expected answer, and goes back to adjust itself so that the next output is better.

Basically, BERT learns from the input and gives an output, and it can then improve that output by learning from its own mistakes. This is the simplest way to explain a machine learning neural network.

This is basically how the flow works:

The network gets its input in the form of large datasets, then the algorithm works its magic with the basics it is built upon. The hidden layers are where the processing happens: a forward pass produces an output, and backpropagation then sends the error in that output back through the network, so it keeps learning and gives more correct output than before. Note that this back-and-forth is about training; the “Bidirectional” in BERT’s name refers to reading the words on both sides of each word. Finally, in the case of text, the network is able not only to read your content, but to help assess its quality as well.
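The forward-then-backward loop described above can be sketched in a few lines. This is a toy illustration only, not BERT's actual training code: the `train` function, the single `weight`, the learning rate and the example data are all assumptions made up for this sketch. It shows a "network" with one weight guessing an output, measuring its error, and nudging itself to do better.

```python
# Toy illustration only: one weight and one training rule. BERT has many
# millions of weights, but the learn-from-your-errors loop is the same idea.

def train(examples, learning_rate=0.01, epochs=200):
    weight = 0.0  # the network starts out knowing nothing
    for _ in range(epochs):
        for x, target in examples:
            prediction = weight * x             # forward pass: produce an output
            error = prediction - target         # how wrong was that output?
            gradient = 2 * error * x            # backward pass: slope of error^2 w.r.t. weight
            weight -= learning_rate * gradient  # adjust the weight to shrink the error
    return weight

# Hypothetical data that follows the rule "output = 2 * input".
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
learned_weight = train(examples)
print(round(learned_weight, 3))
```

After enough passes over the data, the learned weight settles very close to 2, the rule hidden in the examples. Nobody told the network the rule; it found it by repeatedly correcting its own output.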

Can I enhance content for BERT?

The shortest answer is no.

I know the answer is shocking. To understand why, without either dismissing BERT entirely or obsessing over it while writing as if it were Google itself, just keep in mind that what Google is trying to do is create an unbiased AI content reader that measures what you write from the language side.

So, your content needs to be simple, informative & well-structured.

A simplified example:

BERT-Friendly: John Brent is the E-commerce manager at www.shop.com, …

BERT-Unfriendly: John Brent is the currently successful E-commerce manager, who worked previously at Amazon.com, Noon.com, before he recently joined our team at www.shop.com, …

BERT-Friendly: John Brent, www.shop.com’s E-commerce manager, has announced …

BERT-Friendly:

- Name: John Brent

- Title: E-Commerce Manager

- Website: www.shop.com

The first example gets to the point with direct information, and it is a perfect sentence.

Need proof?

OK, try removing any single word from that first sentence. It becomes either grammatically incorrect or short on information. Right?

The third & fourth examples are also direct: the former is a sentence with the fewest possible words, and the latter is a set of bulleted facts. BERT can still read both easily.

In the second example, on the other hand, you can easily spot filler words, keyword cramming and three website addresses. A convoluted sentence, to say the least.

As a matter of fact, the “Examples” part of this very article would not be BERT-friendly, but I had to explain it this way.

For BERT to analyze a sentence, it has to study each word, what it represents, and how it depends on other words to make sense. The links in these dependencies are sometimes called “Hops”.

What are Hops?

Hops represent the grammatical dependency between words. And a word depends on the words on both sides of it, not only on the ones before it, hence the word “Bidirectional” in BERT’s full name.

Image Source: www.briggsby.com

Every arrow in the above image represents a hop, which is basically the dependency between two words in the sentence.

Keep following the arrows linking words to each other grammatically for the concept to sink in.

Now check the next two sentences and visually imagine the dependencies or hops between their words:

- You need to brew coffee on low heat to avoid over boiling it.

- Green and black tea require brewing for around two minutes.

Starting to acquire a hops mentality already, aren’t you?
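To make the idea concrete, here is a minimal sketch that counts hops between two words in the first part of the coffee sentence. Everything in it is an assumption for illustration: the `HEADS` table is a hand-written dependency annotation (not the output of a real parser, and not how BERT stores anything internally), and `path_to_root` and `count_hops` are hypothetical helper names.

```python
# Hand-annotated dependency heads (an assumption for illustration, not parser
# output). Each word points to the word it grammatically depends on; the main
# verb "need" is the root of the sentence, so it has no head.
HEADS = {
    "You": "need",
    "need": None,
    "to": "brew",
    "brew": "need",
    "coffee": "brew",
    "on": "heat",
    "low": "heat",
    "heat": "brew",
}

def path_to_root(word, heads):
    """Return the chain of words from `word` up to the root of the sentence."""
    path = [word]
    while heads[path[-1]] is not None:
        path.append(heads[path[-1]])
    return path

def count_hops(word_a, word_b, heads):
    """Count the dependency links on the path between two words."""
    path_a = path_to_root(word_a, heads)
    path_b = path_to_root(word_b, heads)
    # Find the first shared ancestor and add up the steps taken from each side.
    for steps_a, ancestor in enumerate(path_a):
        if ancestor in path_b:
            return steps_a + path_b.index(ancestor)
    raise ValueError("words are not in the same sentence tree")

print(count_hops("coffee", "brew", HEADS))  # 1: "coffee" depends directly on "brew"
print(count_hops("You", "coffee", HEADS))   # 3: You -> need -> brew -> coffee
print(count_hops("low", "coffee", HEADS))   # 3: low -> heat -> brew -> coffee
```

The fewer hops between the words that carry your point, the more direct the sentence. Because this sketch uses the words themselves as keys, it only works for sentences in which no word repeats.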

What is Masking?

One of the ways BERT learns the dependencies between words is a method called “Masking”. To put it in simple terms, during training the algorithm hides a random selection of the words in a sentence (about 15% of them) and tries to predict each hidden word from the words before & after it. The better it gets at filling in the blanks, the more it has learned about how words depend on each other.
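A simplified sketch of the hiding step might look like this. It is an illustration under stated assumptions, not BERT's real preprocessing: the `mask_tokens` function, the fixed `seed` and the whitespace word split are all made up for this example, while the real model works on sub-word pieces and sometimes swaps in a random word instead of the mask.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Hide a random share of the tokens and remember the original words."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    n_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    answers = {}
    for pos in positions:
        answers[pos] = tokens[pos]  # what the model would have to predict
        masked[pos] = MASK_TOKEN    # what the model actually gets to see
    return masked, answers

sentence = "Green and black tea require brewing for around two minutes"
tokens = sentence.split()
masked, answers = mask_tokens(tokens)
print(" ".join(masked))  # the sentence with hidden words
print(answers)           # position -> original word
```

During training, the model sees only the masked sentence and is scored on how well it recovers the words stored in `answers`, using the context on both sides of each gap.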

How can you benefit from BERT?

Simply move away from the keyword-cramming mentality of the fading SEO world. Just write high-quality, informative copy in your niche. That’s how BERT becomes your good friend.

But if you really want a simple recipe that will make BERT think highly of your content:

- Avoid convoluted sentences and try to provide the needed information in the fewest words, especially at the beginning of your copy.

- Use a question-and-answer approach, and answer the posed question in the first paragraph. You might find one of your pieces appearing as the first result on a Google SERP one day, with a snippet extracted from your content.

Now, I hope that this article has introduced you to a few simple aspects of the NLP algorithm that reads & measures the quality of your written content, just to familiarize you with the kind of scrutiny the content on your website faces these days.

Of course, this topic cannot be covered in one article. And believe me when I tell you, you do not want to go in depth to understand exactly how an AI neural network learns.

But if you really do, Godspeed!

If you are a website owner, content writer or a blogger, then you have to keep BERT in mind.

Samaam Khan
